“The eye sees only what the mind is prepared to comprehend.” — Robertson Davies.

If you think women are bad drivers, you are more likely to notice driving mistakes made by women. A detective who is convinced a suspect is guilty is more likely to pay attention to evidence corroborating their intuition. These are examples of confirmation bias.

While many of us pride ourselves on our objective thinking, the reality is that we humans are terrible at evaluating situations and predicting outcomes based on facts alone. Confirmation bias is our tendency to seek, interpret, favour, and remember information in a way that confirms our prior hypotheses or personal beliefs.

It’s a very common type of cognitive bias, which is even stronger for emotionally charged topics and deeply ingrained beliefs. The more we desire a specific outcome or believe in a specific principle, the more likely we are to search for confirming evidence.

The confirmation bias in action

The term “confirmation bias” was coined by Peter Cathcart Wason, the cognitive psychologist who pioneered the psychology of reasoning: the study of how people solve problems and make decisions. In the 1960s, Wason conducted a series of experiments to study our tendency to confirm our existing intuitions or beliefs by ignoring disconfirming evidence.

The first experiment Wason designed is known as the 2-4-6 task. Participants were told that the experimenter had a secret rule in mind, and were asked to continue the sequence 2, 4, 6… by generating further triples that respected that rule.

Here is an example of how it would often go:

- Exp. “Please complete the following sequence: 2, 4, 6…”

- Par. “8, 10, 12.”

- Exp. “Correct.”

- Par. “14, 16, 18.”

- Exp. “Correct. Do you think you guessed the rule?”

- Par. “Yes, it’s an increment of 2.”

Do you see the problem? The participant never tested for rules that would refute their initial intuition. If they had tried “7, 13, 24”, the experimenter would also have replied “correct”, because the actual rule of the 2-4-6 task is “any three numbers in ascending order.”
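The logic of the task can be made concrete with a small Python sketch. The functions `hidden_rule`, `guessed_rule` and `is_informative` are hypothetical names introduced for illustration: they encode the experimenter’s actual rule and the participant’s “increment of 2” guess, and flag a test triple as informative only when the two rules disagree about it.

```python
# A sketch of the 2-4-6 task: the hidden rule versus the participant's guess.
# The rule functions below are illustrative assumptions, not Wason's materials.

def hidden_rule(triple):
    """The experimenter's rule: any three numbers in ascending order."""
    a, b, c = triple
    return a < b < c


def guessed_rule(triple):
    """The participant's hypothesis: each number is 2 more than the last."""
    a, b, c = triple
    return b - a == 2 and c - b == 2


def is_informative(triple):
    """A test can only change our mind if the two rules disagree about it."""
    return hidden_rule(triple) != guessed_rule(triple)


confirming_tests = [(8, 10, 12), (14, 16, 18)]
disconfirming_test = (7, 13, 24)

for t in confirming_tests + [disconfirming_test]:
    print(t, "experimenter says:", hidden_rule(t), "| informative:", is_informative(t))
```

Both confirming triples get “correct” under either rule, so they cannot tell the two hypotheses apart. Only a triple the participant expects to fail, such as 7, 13, 24, can reveal that the guess was too narrow.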

But most participants in this experiment tended to go for more specific rules—another common one was multiples of the initial numbers (“10, 20, 30” for instance). Try this experiment with friends and you will probably get a similar result across the board.

A few years later, Wason created another test, which has come to be known as the Wason selection task. It is considered one of the most famous tasks in the study of deductive reasoning. In this experiment, participants are presented with four cards and given a rule by the experimenter. They are then asked which cards they would need to turn over to determine whether the rule is true or false.

While the original task used numbers (even, odd) and letters (vowels, consonants), here is an example where the participant is shown a set of four cards placed on a table, each of which has a number on one side and a colour on the other side. The visible faces of the four cards show 7, 6, blue and yellow.

The experimenter would say: “Which card or cards should you turn over in order to test the truth of the proposition that if a card shows an even number on one face, then its opposite face is blue?” Take a few seconds to think about it before reading the solution.

The correct response is to turn over the 6 card and the yellow card. If you got it wrong, don’t worry: most people do.

- If the other face of the 6 card is not blue, it violates the rule.

- If the other face of the yellow card shows an even number, it violates the rule.

- Whatever the number on the blue card (odd or even), it doesn’t violate the rule.

- Whatever the colour of the 7 card (blue or yellow), it doesn’t violate the rule.
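The same reasoning can be checked mechanically. The sketch below assumes each card has a number from 1 to 9 on one side and either blue or yellow on the other; `violates` and `worth_turning` are hypothetical helpers written for this illustration. A card is worth turning over only if some possible hidden face would break the rule.

```python
# Which cards in the selection task can falsify the rule
# "if a card shows an even number on one face, its opposite face is blue"?
# Assumption for this sketch: every card has a number (1-9 here) on one side
# and either blue or yellow on the other.

NUMBERS = range(1, 10)
COLOURS = ("blue", "yellow")


def violates(number, colour):
    """A card breaks the rule only if it pairs an even number with a non-blue colour."""
    return number % 2 == 0 and colour != "blue"


def worth_turning(visible):
    """True if some possible hidden face would make this card violate the rule."""
    if isinstance(visible, int):
        # A number is showing, so the hidden side is a colour.
        return any(violates(visible, c) for c in COLOURS)
    # A colour is showing, so the hidden side is a number.
    return any(violates(n, visible) for n in NUMBERS)


cards = [7, 6, "blue", "yellow"]
print([card for card in cards if worth_turning(card)])  # [6, 'yellow']
```

The blue card never appears in the output: no number on its back can make it break the rule, so turning it over can only ever confirm.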

While the rule says “If the card shows an even number on one face, then its opposite face is blue,” we tend to create a more specific rule in our mind by assuming “If the card shows an odd number on one face, then its opposite face is yellow,” which was never mentioned by the experimenter. The rule makes no claims about odd numbers, and the experimenter never said blue was exclusive to even numbers.

Once we have made this automatic assumption, we flip cards to confirm that belief (such as the 6 and the blue card) instead of objectively thinking about which cards could actually disprove the rule stated by the experimenter.

These may seem like fun games conducted in a laboratory, but we fall prey to the confirmation bias on an everyday basis, both in our work and in our personal lives.

Why we fall prey to the confirmation bias

In The Web of Belief (1970), Willard Van Orman Quine, widely considered one of the most influential philosophers of the twentieth century, wrote: “The desire to be right and the desire to have been right are two desires, and the sooner we separate them the better off we are. The desire to be right is the thirst for truth. On all counts, both practical and theoretical, there is nothing but good to be said for it. The desire to have been right, on the other hand, is the pride that goeth before a fall. It stands in the way of our seeing we were wrong, and thus blocks the progress of our knowledge.”

The most basic explanation for the confirmation bias is wishful thinking—where we make decisions and formulate our beliefs based on what feels pleasing to imagine, rather than on actual evidence. Wishful thinking is closely related to another cognitive bias: optimism bias, where people show unrealistic optimism—a bias so common it has even been reported in rats and birds.

The simplest way to formulate how wishful thinking works is: “I wish that X were true/false; therefore, X is true/false.” In the case of the confirmation bias, the fallacy is less clear-cut and explicit, and would be better formulated as: “I think that X is true/false; therefore, I will find evidence to confirm X is true/false.”

Another explanation is simply the limited human capacity to process information, which is linked to the concept of cognitive load. In cognitive neuroscience, our cognitive load refers to the amount of working memory resources being used. For instance, for most educated adults, solving a complicated equation has a higher cognitive load than reciting the multiplication table.

Because it requires so much energy to function, our brain is lazy—it often tries to go for the processing approach that requires the lowest cognitive load possible. In many cases, this results in following our first instinct instead of taking a step-by-step, more tedious but more objective approach to problem-solving.

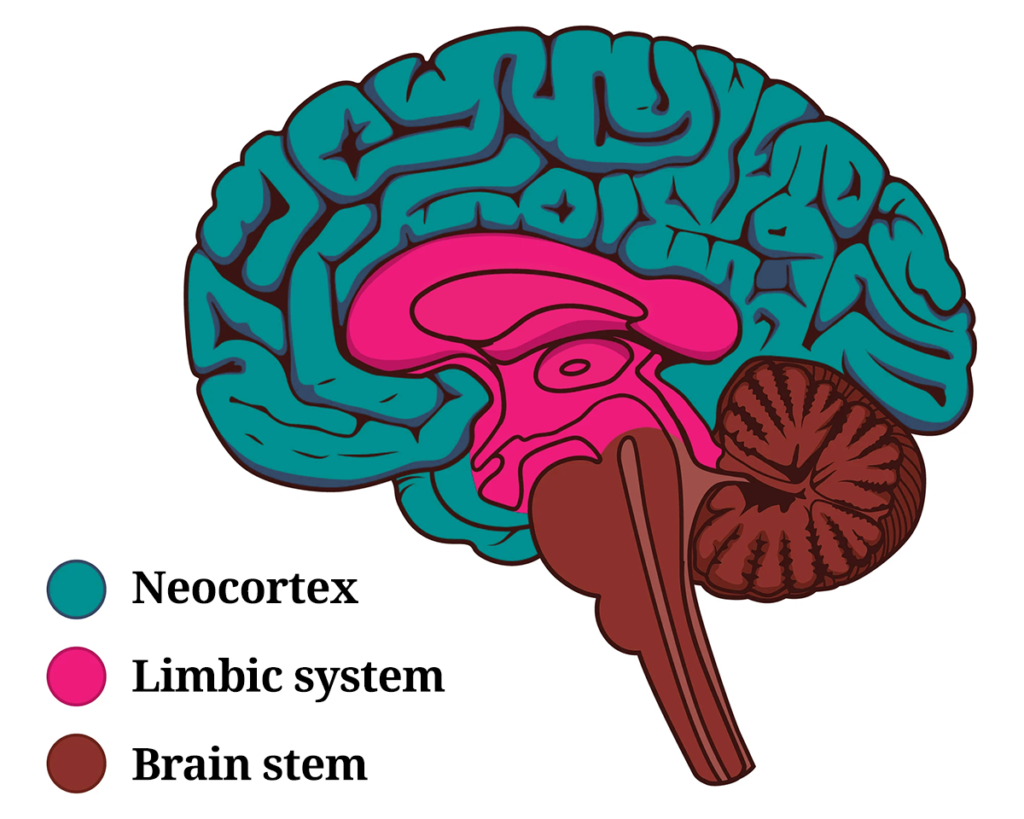

Especially when we’re under pressure, our brain tends to take mental shortcuts to save energy. This makes sense from an evolutionary perspective. If you look at a simplified (some would say oversimplified) picture of the brain, there are roughly three main layers.

The one at the very top, the neocortex, which includes the prefrontal lobes, is where most of the thinking happens. It’s the part of the brain that allows us to think, calculate, and communicate through written and spoken language. Below lies the limbic system, sometimes called the emotional brain. This is the part of the brain that gets activated when you are feeling anxious, or when you are feeling loved. And finally, the oldest part of the brain, the brain stem, often called the reptilian brain, is where our stress response, or fight-or-flight response, happens.

The primordial version of our brain was built for quick decision making—for survival. When a lizard hears a noise or sees something moving, it just disappears straight away. The lizard doesn’t stop to think: “What was that? Is it really dangerous? Should I move or stay put for now?” Instead, it has an automatic reaction.

Fortunately, these reactions are not usually as extreme in humans, but we still have some automatic reactions embedded in our decision-making processes. The confirmation bias is one such thinking shortcut, where our brain skips part of the information processing to reach a decision faster.

Finally, another common explanation is that we show confirmation bias because we are weighing up the costs of being wrong, rather than investigating problems in a neutral, scientific way. (You would think that being a scientist would offer some protection against the confirmation bias. However, research shows that even scientific researchers are prone to it. As Warren Buffett put it: “What the human being is best at doing is interpreting all new information so that their prior conclusions remain intact.”)

Confirmation bias has been extensively studied in relation to motivated reasoning, which is when we reach the conclusions we most desire rather than those that accurately reflect the evidence. Social psychologist Ziva Kunda described the “tendency to find arguments in favor of conclusions we want to believe to be stronger than arguments for conclusions we do not want to believe.”

All in all, the confirmation bias can almost always be explained by one of the following three mistakes we often make:

- Biased search for information. As Wason’s experiments show, this is when we tend to test hypotheses in a one-sided way, ignoring disconfirming evidence.

- Biased memory. We tend to remember evidence selectively to reinforce our expectations, which is called “selective recall” or “confirmatory memory.”

- Biased interpretation. Finally, even if two people have the same information, the way they interpret it can be biased by their personal beliefs.

“The confirmation bias is so fundamental to your development and your reality that you might not even realize it is happening. We look for evidence that supports our beliefs and opinions about the world but excludes those that run contrary to our own.” — Sia Mohajer, The Little Book of Stupidity.

Confirmation bias and illusory correlations

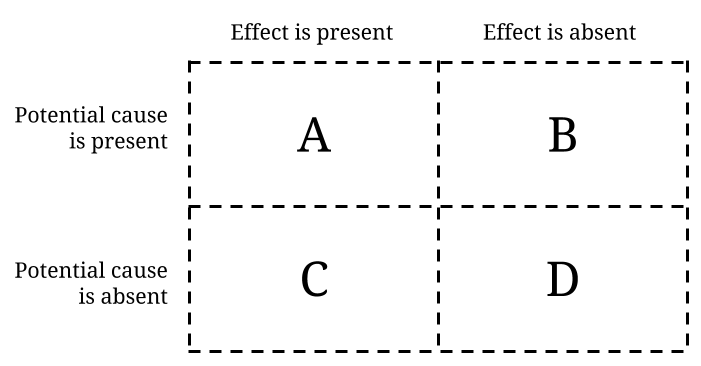

It would be hard to talk about confirmation bias without mentioning illusory correlations, which are a major source of confirmation bias in action. The term illusory correlation describes our tendency to overestimate relationships between two variables even when no such relationship exists. We often use illusory correlations to confirm our existing biases.

“Persons believing in extrasensory perception will keep close track of instances when they were ‘thinking about Mom, and then the phone rang and it was her!’ Yet they ignore the far more numerous times when (a) they were thinking about Mom and she didn’t call and (b) they weren’t thinking about Mom and she did call. They also fail to recognize that if they talk to Mom about every two weeks, their frequency of ‘thinking about Mom’ will increase near the end of the two-week-interval, thereby increasing the frequency of a ‘hit.’” — C. James Goodwin in Research in Psychology: Methods and Designs.

These illusory correlations are the result of two further biases: attentional bias and interpretation bias. Yes, it’s turtles all the way down. Both are fairly self-explanatory. Attentional bias is our tendency to pay attention to some things while ignoring others: in the above example, the person pays attention to the times Mom called and ignores the times she didn’t. Interpretation bias is when we inappropriately analyse ambiguous events. There could be many reasons why Mom called when we were thinking about her (it’s an ambiguous event), but we read more meaning into it than it holds.
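A contingency table makes the “thinking about Mom” example concrete. The day counts below are hypothetical, invented purely for illustration, and `call_rate` is a helper written for this sketch. The point is that the memorable “hits” fill only one of four cells: judging a correlation fairly means comparing how often Mom calls on days we think about her with how often she calls on days we don’t.

```python
# Illusory correlation: the "hits" we remember versus the full 2x2 table.
# The day counts below are HYPOTHETICAL, chosen only to illustrate the idea.
from fractions import Fraction

counts = {
    ("thinking", "called"): 10,        # the memorable "hits"
    ("thinking", "no call"): 90,       # thought of Mom, no call: easily forgotten
    ("not thinking", "called"): 20,    # she called unprompted: rarely counted as a miss
    ("not thinking", "no call"): 180,
}


def call_rate(state):
    """Share of days in a given mental state on which Mom called."""
    called = counts[(state, "called")]
    total = called + counts[(state, "no call")]
    return Fraction(called, total)


print("Remembered hits:", counts[("thinking", "called")])
print("P(call | thinking about Mom):", call_rate("thinking"))
print("P(call | not thinking):", call_rate("not thinking"))
```

With these made-up numbers, Mom calls on one day in ten either way, so there is no relationship at all, even though the ten hits are the only days we are likely to remember.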

Another popular example is how Dr. David Mandell of the Children’s Hospital in Pittsburgh found that 69% of surgical nurses in his study believed that a full moon led to more chaos—and hospital admissions that night. Chaos and no full moon? Nothing to talk about. Chaos and full moon? There must be a correlation. Add confirmation bias to this illusory correlation, and you will soon hear nurses say: “No wonder it’s chaos—there’s a full moon tonight!”

As you can see, these are similar to the biased search for information, selective memory, and the distorted interpretation we see in the confirmation bias. Beyond illusory correlations, there are many areas of our lives and societies that are unfortunately dictated by our confirmation bias.

Confirmation bias in everyday life

The confirmation bias is everywhere around you. Here are some areas where you will be able to easily spot instances of confirmation bias in your daily life.

- Religious faith. Followers of any religion tend to see everyday occurrences as proof of their religious convictions: tragedies may be seen as tests of faith, while positive events may be seen as miracles. On the other hand, atheists may see tragic events as a confirmation of their lack of faith.

- Eyewitness accounts. Criminal justice experts consider confirmation bias a key reason for wrongful convictions. For instance, if you see a dog attack a child, your interpretation (was it a vicious attack, or was the dog defending itself?) will be biased by whether or not you are a dog lover.

- News and information. We tend to re-share information we agree with. The problem? Our confirmation bias will tend to make us consume information which is already aligned with our personal convictions. This is a vicious cycle which is reinforced by the silos created by social media.

- Political parties. These can be seen as institutionalised confirmation bias. Instead of seeking diverse views to solve national and global problems, they encourage seeking information within a group of people with the same views and beliefs.

- Workplace diversity. This is a complex topic and confirmation bias is certainly not the only reason for the lack of diversity in certain industries, but it certainly has an impact. For instance, the myth of the confidence gap between men and women may have led to self-fulfilling prophecies, leading women either to be passed over for promotions or to pass on promotions themselves.

Then there is the wider impact of cognitive bias on our society, with prejudice, discrimination, and extreme political partisanship often rooted in our natural tendency towards confirmation bias. This trend may be made worse by the fact that many Western cultures have a “just do it” approach to life which doesn’t reward slow thinkers.

NB. This article by Shane Parrish states that our preference for predictable music is an example of confirmation bias. However, recent research suggests that our enjoyment of pop music classics is actually linked to a combination of uncertainty and surprise.

Unclouding our judgement

We would all like to think we are open-minded. But the confirmation bias often clouds our judgement and narrows our curiosity. Avoiding the confirmation bias is mostly about keeping a curious mind and questioning your automatic assumptions.

As with many cognitive biases, there’s no magic formula, and avoiding them requires self-awareness and conscious training. All the same, here are ten questions to ask yourself next time you are reading or hearing a story and want to avoid falling prey to the confirmation bias.

- Did I read the whole story or did I jump to a conclusion based on a quick skim?

- What made me believe this story?

- Which parts of the story did I automatically agree with?

- Which parts did I ignore or skim over?

- Did this story confirm any of my existing beliefs? Which ones?

- How many acquaintances do I have with the same belief?

- Is this story from a trustworthy source?

- What percentage of my information comes from this source?

- What would it mean if I was wrong?

- Which facts would someone use to argue I am wrong?

Being aware of your own confirmation bias is far from easy, but it’s worth it if you want to better understand the world, make better decisions, and have better relationships both at work and in your personal life. Be more wrong in the short term, and you’ll be more right in the long term.

If you want to learn more about cognitive biases, read the Cognitive Biases in Entrepreneurship research report, which is a 45-page review of the latest research in cognitive biases and how they affect entrepreneurs.